From secure to trusted: Why AI needs a human lens

This week, I had the privilege of presenting alongside Holly Wright, an AI and Cyber Software Engineer at IBM, at a joint event hosted by the Australian Women in Security Network (AWSN) and Suncorp. Our talks tackled one of the most urgent challenges in artificial intelligence: the widening gap between secure systems and trusted ones. In this article, I explore why even the most technically robust AI systems can fail in practice, and why trust, not just technology, will determine whether people actually use them.

Three Key Insights

1. Position AI as a Collaborator, Not a Controller

AI should support—not replace—human judgment. Adoption grows when AI is framed as a partner that augments decision-making, not an authority that overrides it.

2. Trust Grows Through Experience

Trust can’t be engineered into a product overnight. It builds gradually, through lived experience, reflection, and feedback. Early adoption strategies must create space for this learning.

3. Organisational Signals Matter

Trust doesn’t reside in the algorithm alone. It’s shaped by how AI is introduced and supported across the organisation. Leaders and change agents play a pivotal role in setting expectations, building psychological safety, and aligning AI with organisational values.

In short: trust doesn’t just live in the product—it lives in the environment.

Securing Large Language Models

Holly delivered a sharp, timely presentation on prompt injection attacks, one of the most critical vulnerabilities affecting large language models (LLMs). Drawing on the paper "Ignore This Title and HackAPrompt: Exposing Systemic Vulnerabilities of LLMs through a Global Scale Prompt Hacking Competition", she showed how even cutting-edge generative models can be manipulated with surprisingly simple inputs.

These risks are not theoretical. As Holly noted, this is the new frontline of cybersecurity—and it’s developing fast. What struck me most during her session was the sheer creativity of the hackers. The lateral thinking involved is both impressive and, frankly, a little terrifying.

Trusted Artificial Intelligence

In my session, I focused on a different but deeply connected issue: an AI system being secure doesn't guarantee that it will be trusted. And if it isn't trusted, it won't be adopted.

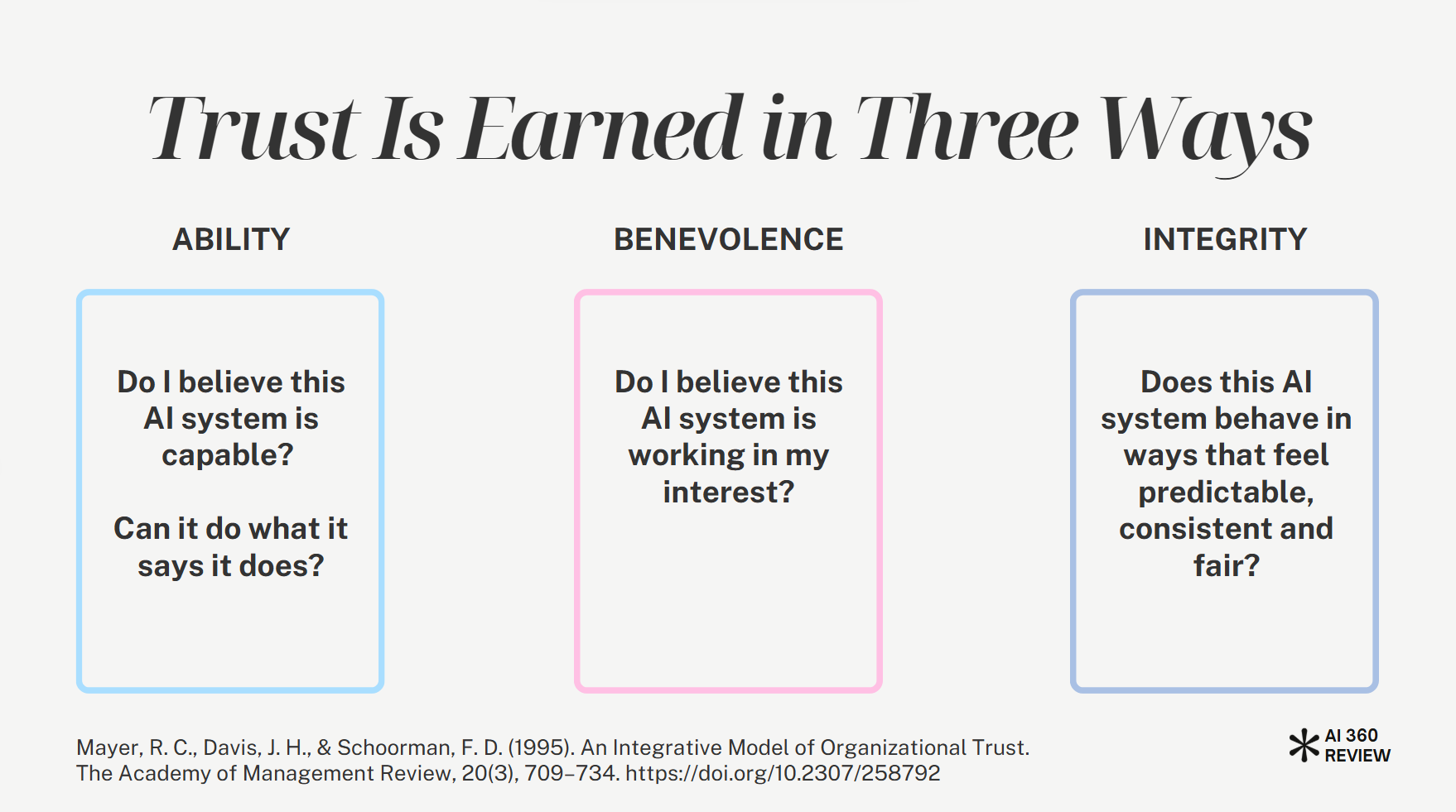

Using Mayer, Davis, and Schoorman’s model of organisational trust, I explained that trust is built on more than ability. It also relies on how people perceive the AI system’s benevolence and integrity—whether it acts in their interests and behaves in consistent, fair, and predictable ways.

To everyone who attended—thank you. The thoughtful questions, generous conversation, and collaborative energy from the AWSN and Suncorp Cyber teams made the morning deeply rewarding. Special thanks to Holly Wright for surfacing the risks we cannot afford to ignore, and to the Cyber Education and Awareness team at Suncorp for making events like this possible.


How the AI360 Review supports people-centred AI adoption

Through the AI360 Review, we help executive teams move beyond the myth that security is enough. We work with you to assess where trust gaps exist and to build the human capability required for strategic, responsible AI adoption.

If you're navigating AI transformation, the AI360 Review offers a clear, people-centred path forward.